LiA Showcase: Drones in Disaster

This summer, I worked for six weeks with a non-profit organisation called FlyingLabs, a subsidiary of WeRobotics, as part of my Leadership-in-Action project. The objective of this charity is to democratise technology, sharing its knowledge and benefits with all people. In practice, the charity partners with communities and local experts to use cutting-edge robotics and UAV technology to solve local issues. Importantly, these include issues that may not have a traditional profit incentive and, as a result, are being neglected by “the market”. The charity has branches all around the world, but primarily in the Global South, where the need is greatest.

Autonomous vehicles are composed of many complex and painstakingly engineered components. They need sensors and cameras to perceive the world around them. They need lightweight yet robust software to process and understand that data. They need agile path planning algorithms to help them navigate their environment. And each of these components is incredibly difficult to design and build.

One such component in these vehicles is the mapping and localisation system. Essentially, this module is there to answer the questions "where am I?" and "what's around me?". I'm sure you can imagine plenty of examples where these questions are of great importance. A drone may need to localise before delivering supplies to a village or a home in need. An autonomous car may need to track the objects around it, even when they're out of view. Even an autonomous vacuum needs to know where it has visited already!

The example I focussed on in my project relates to drones and their use in disaster relief. Micro air vehicles (MAVs) are being used more and more in these contexts. These are lightweight, agile, often low-resource drones. They are typically much cheaper than their better-performing counterparts, which makes them more accessible and more reasonable to send on high-risk missions. Importantly, technology is being developed to control "swarms", or groups, of these drones. The intelligence found in these swarms may be significantly more useful to an engineer than that found in a single high-performance robot.

One use of these swarms is in rapid-response disaster relief. If a building has collapsed, a swarm of drones can look for survivors in a fraction of the time that it would take a rescue party. Moreover, these drones can explore much more dangerous areas. Fire rescue drones, and their potential deployment alongside humans, are being developed quickly. These drones should be able to rapidly explore a building, map out the area, and locate people in need of urgent assistance. For my project, I worked with the team to build the mapping part of this system, and to write guiding documentation that would outline the path to deployment for these systems.

The first week of my project consisted largely of investigating the problem. I read papers and explored startups in the space. I also spent some time exploring the problem itself and how it related to the technology. By the end of the week, I felt I had clarified the issue in my head and understood where to go with the technology.

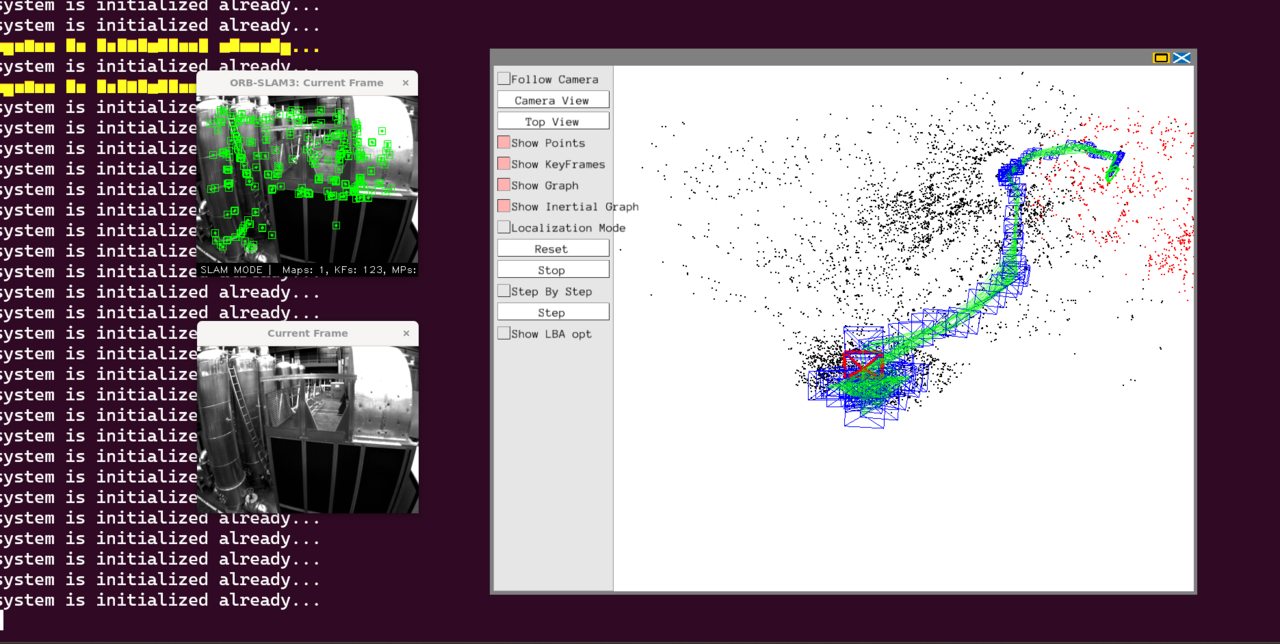

For the second week, I performed some "proof of concept" experiments for the system. I decided to target the Crazyflie drone for this project. This was largely motivated by its low cost and its open-source software and firmware. I tried to recreate the images that would be received from the drones. I then fed these into a commonly used Simultaneous Localisation and Mapping (SLAM) algorithm, ORB-SLAM. I performed some tests on a popular MAV dataset and recorded the results.

For the third and fourth weeks, I worked with the team to resolve an issue we had come across during the experiments. The algorithm performed poorly in low-light conditions, which would be common in a fire rescue scenario. To compensate for this, we took a different approach that used a feature extractor based on a convolutional neural network (CNN) instead of the more traditional approach. We built this system over the two weeks, testing it on the same dataset as in the weeks prior.

For the fifth week, I performed some more tests to compare the CNN-based approach to the more traditional approach. Although not that exciting, this data was very important to those at FlyingLabs, as it would inform the design of this system after I returned home.

The final week was when I documented my work over the whole project. I wrote documentation for the software I created during the project, as well as some documentation illustrating "where to go from here". During this week I also started drafting the guiding document for the deployment of these systems. This document outlined the current drone legislation, especially as it applies to autonomous drones. These documents represented the culmination of six weeks of exploration into this application, as well as the previous years that I have spent developing these technologies. These suggestions should give the FlyingLabs Madrid team a clear pathway to develop this technology further, while also saving them weeks of research and development time in the future.
